How did AMD’s graphics get so bad? SemiAccurate wanted to find something to like about the new 9070 series GPUs but AMD made that impossible.

Let’s *SIGH* once again start out with the stupid, AMD itself. We actually really hate doing this but AMD keeps pulling out the footgun and it is getting painful to watch. No, not on the hardware, they do that too, more later. This time it is about the launch itself, again. We keep telling AMD that when you make it impossible to cover the product, the story becomes about you, not the product. Guess what?

AMD has this idiotic cycle of self-harm where they do a big dog and pony show to kiss up to the influencers, YouTubers, and others in the small word set. They get horrific feedback on the launch from anyone with two brain cells to rub together and dutifully explain why it is not their fault, why we are overstating things, and other transparently wrong excuses. The next time around they ‘learn’ and do a really awful phone brief like the one for the 9070. Following the universally bad feedback on that, they go back to the other bad way, rinse and repeat, but never fix the problem.

This is OK, crappy briefings that waste otherwise useful oxygen are part of the game but there is one thing missing, the data we need to do the job. AMD consistently and intentionally does NOT do technical briefings on consumer products anymore, and there is NO excuse for this. The last time was a two day event with a 30 minute ‘tech’ session, including Q&A, that kinda listed the bullet points for a real briefing. This time around was a 52 minute overview, including Q&A time, plus three ~30 minute videos on specific topics.

One of these topics was a technical ‘deep dive’ on RDNA4 which concluded with the phrase, “brief overview”. Worse yet there was NO ability to ask questions on this one, only a canned video. Last Friday I asked the first set of easy questions, basically what works with Linux for every ‘technology’ mentioned. Normally in a real briefing I ask this during or immediately afterwards and it takes all of five seconds to get an answer. Two days ago I got my first woefully incomplete answer to this question, my followup has yet to be responded to. This is a joke.

The real questions that should have followed once I had the baselines answered haven’t been asked because I couldn’t. Come on AMD, you used to be better than this, note the past tense. Because of this we really can’t tell you anything useful about the things AMD wants you to hear about. Worse yet is their idiotic stance on disclosing pricing, basically they won’t until it is public. This is wrong on many levels.

The problem here is I have never seen one of these cards. I have never used one of these cards and the review/numbers embargo is not today so there is no information publicly available. AMD wants us to write good things about a device that we have never seen, (spoiler alert) is _SLOWER_ than the previous generation by their own admission, is actually less efficient than before, and costs how much? See the problem? This is a self-inflicted wound but that seems to be the intent of AMD messaging so who am I to criticize?

OK let’s get on to those technical-ish claims, slower and less efficient. The slower is obvious, they literally said it is slower than the previous card in traditional workloads but faster in things like fake ‘AI’ frames and AI itself. Which do you think is relevant, slower in real workloads or faster in made up numbers? Since those fake frame generators and AI BS don’t work in Linux, that seems to be the take home of the ‘answer’ to the earlier question I submitted, it is unquestionably slower.

Speaking of Linux, AMD promised to have a driver at launch this time so it may actually work. The last two generations sort of had a launch driver but it did not go so smoothly. I don’t have a card to test so I can’t say how it will break this time around but I am not hopeful it will install right once again.

Where the Radeon 9070 fits in

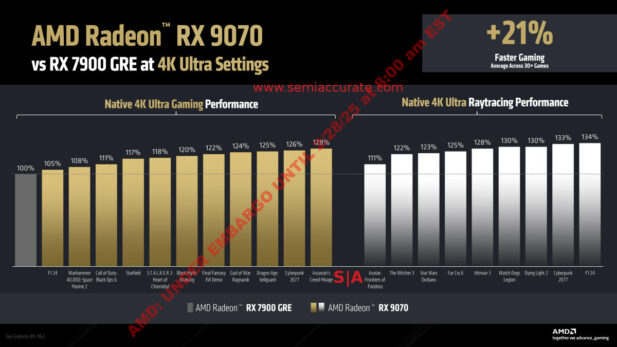

Now we come to the key bit, efficiency. The new cards are between the older 7900XT and the 7900GRE which lines up with the slower bit AMD begrudgingly admitted to. Comparisons in the briefing deck include a lot of BS about AI performance, TOPS, and comparisons to such relevant devices as the Radeon 6800XT and the Nvidia RTX3080. Then AMD compares the 9070XT to the crippled 7900GRE which it beats by a claimed 42% at 4K and 38% at 1440p. The same tests for the 9070 non-XT are a claimed 21/20% faster at 4K/1440p respectively. Sounds good until you see that the largest increases come from raytracing performance, an area which AMD claims to have doubled performance. If you only look at the games, take 10%+ off those claims.

But is the performance real? AMD won’t say.

AMD also says they significantly improved the fake frames performance, something which we actually believe. That said the benchmark pages do not list whether fake frames are turned on for the presented benchmarks or not, and this is also omitted in the footnotes. AMD usually includes this, why backpedal this time? Oh wait we just answered that. Speaking of which AMD once again omits the system specifications on everything so the numbers they include should not be taken as honest in any way. Come on AMD, you were once better than this.

The spec for the new cards

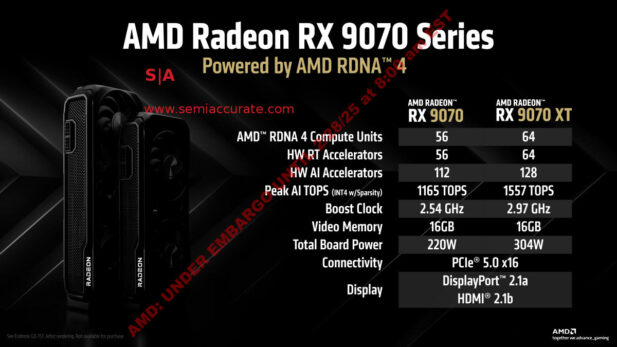

Back to the first real problem, efficiency. The TDP of the 9070XT is 304W and the 9070 is 220W, nothing unusual there. The older 7900XT pulled 300W, 7900XTX 355W, and the 7900GRE 260W. So far so good. Then you can look at the memory, 7900XT 20GB, 7900XTX 24GB, 7900GRE 16GB, and both of the 9070s are only at 16GB. The 9070s use faster GDDR6 but the bus is 50% narrower so it is a net loss.
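For anyone who wants to check the spec math, here is a minimal sketch that tabulates only the TDP and memory figures quoted above and compares each older card against the 9070XT. The `cards` table and the `compare` helper are illustrative names of our own, not anything from AMD.

```python
# TDP (watts) and memory (GB) exactly as quoted in the text above.
cards = {
    "9070XT": {"tdp_w": 304, "mem_gb": 16},
    "9070": {"tdp_w": 220, "mem_gb": 16},
    "7900XTX": {"tdp_w": 355, "mem_gb": 24},
    "7900XT": {"tdp_w": 300, "mem_gb": 20},
    "7900GRE": {"tdp_w": 260, "mem_gb": 16},
}


def compare(card, baseline="9070XT"):
    """Return (TDP delta in watts, memory delta in percent) of card vs baseline."""
    c, b = cards[card], cards[baseline]
    tdp_delta = c["tdp_w"] - b["tdp_w"]
    mem_delta_pct = (c["mem_gb"] - b["mem_gb"]) / b["mem_gb"] * 100
    return tdp_delta, mem_delta_pct


for name in ("7900XTX", "7900XT", "7900GRE"):
    tdp_delta, mem_delta_pct = compare(name)
    print(f"{name}: {tdp_delta:+d} W, {mem_delta_pct:+.0f}% memory vs 9070XT")
```

This only restates board-level numbers; it says nothing about measured power draw or real performance, which nobody outside AMD can verify yet.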

When you add it all up you have a slower card with less memory THAT TAKES MORE POWER. Let me repeat that, the 7900XT which AMD says is FASTER than the 9070XT, has 25% more GDDR6, and pulls 4W less than the 9070XT! The 9070 fares much better at 220W but given that it has 7/8ths the shaders, ~5/6ths the clock speed, and likely slower memory, you see why AMD didn’t include a single benchmark with it in the slides? It does take a lot less power than the others though so win? For the pedantic the numbers are 56/64 * 2.54/2.97 or a hair under 75%.
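The pedantic calculation above can be reproduced with a short sketch. It assumes that 56 and 64 are the compute unit counts of the 9070 and 9070XT, that 2.54 and 2.97 are their clocks in GHz, and that throughput scales linearly with both, which is a back-of-envelope simplification and not a benchmark. The function name `relative_throughput` is ours.

```python
def relative_throughput(units, clock_ghz, base_units, base_clock_ghz):
    """Naive scaling estimate: ratio of unit counts times ratio of clocks.

    Assumes perfect linear scaling and ignores memory bandwidth,
    so treat the result as a rough upper-level estimate only.
    """
    return (units / base_units) * (clock_ghz / base_clock_ghz)


# 9070 vs 9070XT, using the figures quoted in the text.
ratio = relative_throughput(56, 2.54, 64, 2.97)
print(f"9070 is roughly {ratio:.1%} of a 9070XT on paper")
```

The product lands just below three quarters, in line with the "hair under 75%" figure.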

This is the first hallmark of a failed architecture, you are both slower AND take more power to do so than the older generation. Sure you can claim fake frames, better AI performance, and better media encode but I can’t say if any of these things are true. Given how AMD pulled their usual disclosure about the setup, I will go out on a limb and say they aren’t close to the real world performance AMD claims but you have to wait a week for independent reviews. Slimy PR tactics here.

The second problem that points to a failed architecture is which tier of cards are launching. You may have noticed that AMD did not launch a high end card this time around. The current die is ~350mm^2 on TSMC 4nm and has about 54B transistors compared to the older 7900XT/XTX which had a main die of 300mm^2 on TSMC 5nm and 6x37mm^2 memory controllers on TSMC 6nm, a true chiplet GPU. This time around the high end chiplet device is AWOL, we first heard about this at Computex and confirmed it at CES. Before you ask, ODMs at both shows were a bit peeved that there was no high end part this generation so don’t hold your breath waiting.

Why would you not make a (likely) cheaper to manufacture device that competes in a market with vastly greater ASPs and margins? Could it be because the architecture didn’t work? By that we mean it was either far higher power than tolerable as intimated above or it literally didn’t work right. Or both. We will go with both for now, it is something SemiAccurate would have asked about but AMD precluded us from doing so. Pretty smart on their part actually, unless word gets out despite their best efforts.

It would be remiss of us not to talk about the software efforts. AMD claims that they have revamped the driver UI but as we keep pointing out, we have never actually seen, much less used, the new version. What did they add? AI of course, the bane of people that can read at a basic level or higher everywhere. Instead of making a usable UI that isn’t painful to use, AMD took the crappy one and added AI. Voice recognition works so well in all circumstances, right? And more AI crap included too but we won’t bore you any more other than to say the drivers now include a chatbot. This is one time I am thankful that AMD didn’t include a feature in their Linux drivers.

Similarly the fake frames get a boost, maybe, with large 3-4x ‘performance’ improvements. Upscaling is easier than doing the work so let’s ‘improve’ things that way! The media engine is also said to be improved both in quality and speed but who knows what it really does? Lots more improvements that may or may not be real but as we keep harping on, we couldn’t ask questions so we won’t contribute to the echo chamber and fake news by pimping them.

So what do we have in the end? An obviously failed architecture topped off with a self-inflicted wound on the messaging side. AMD really didn’t want you to know that these new cards are both slower and less efficient than their 2+ year old predecessors. The expected high end devices failed and AMD graphics are lost. Let’s hope it is not a permanent problem and AMD gets their act together. Again. S|A

Update: AMD released pricing after this story was written with the 9070 coming in at $549 and the 9070XT at $599. Currently at Newegg the slower 7900GRE costs $569, a faster 7900XT runs $679, and the cheapest XTX is way overpriced at $979. Based on this it is understandable why AMD kept pricing from journalists until AFTER their stories were written and went live, the older cards are a better buy. If your offering was this bad, wouldn’t you take the low road too?
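To put those prices side by side, a quick sketch follows. It uses only the dollar figures quoted in the update, with the $599 card taken as the baseline. The `premium_pct` helper is a name of our own, and street prices will obviously move.

```python
# Prices in USD as quoted in the update above (launch price or Newegg listing).
prices = {
    "9070": 549,
    "9070XT": 599,  # the $599 card
    "7900GRE": 569,
    "7900XT": 679,
    "7900XTX": 979,
}


def premium_pct(price, baseline):
    """Percent price difference of `price` relative to `baseline`."""
    return (price - baseline) / baseline * 100


base = prices["9070XT"]
for name in ("7900GRE", "7900XT", "7900XTX"):
    print(f"{name}: ${prices[name]} ({premium_pct(prices[name], base):+.1f}% vs the $599 card)")
```

Whether any of those gaps is worth paying depends on performance numbers that were still under embargo when this was written.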