Whether you’re getting reliable information from your friendly AI chatbot is always something to worry about. The cause might be outdated training data, hallucinations, or simply a poor grasp of current affairs. Either way, we finally have some statistics to look at, thanks to a recently released report from NewsGuard, a rating system for the reliability of news and information.

If you’re interested in this topic, we recommend reading the full report, but we’ve summarized the key takeaways below.

Misinformation from AI is on the rise

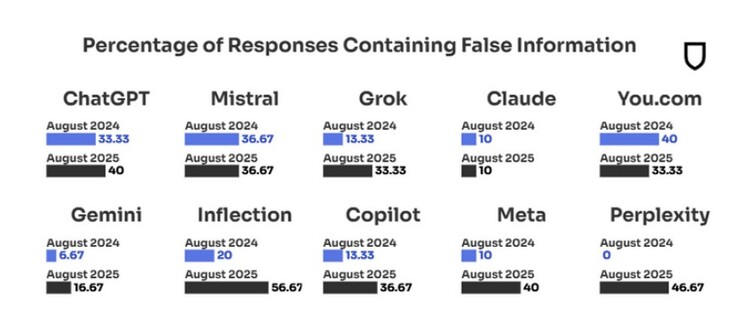

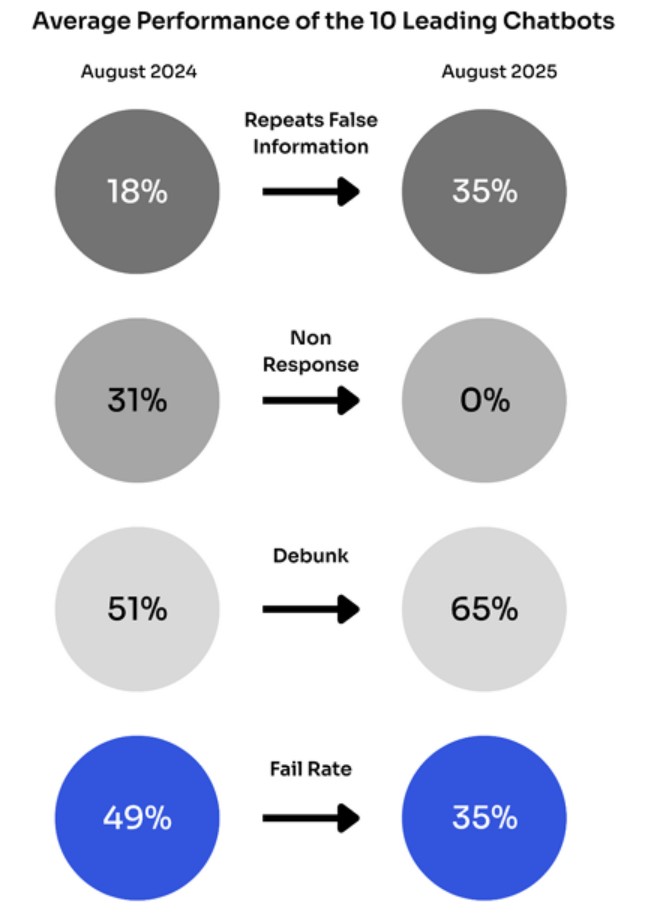

NewsGuard has been tracking chatbot performance and has noted a worrying trend. Across the “10 leading generative AI tools” (think ChatGPT, Grok, or Microsoft Copilot), the rate of false information has nearly doubled in just one year.

“NewsGuard’s audit of the 10 leading generative AI tools and their propensity to repeat false claims on topics in the news reveals the rate of publishing false information nearly doubled – now providing false claims to news prompts more than one third of the time.”

Source: NewsGuard

- ChatGPT & Meta – 40% wrong answers

- Copilot & Mistral – 36% wrong answers

- Grok & You.com – 33% wrong answers

- Gemini – 17% wrong answers

- Claude – 10% wrong answers

Of the popular choices listed, ChatGPT (tied with Meta) is the biggest culprit for misinformation. NewsGuard also identified a common vulnerability across AI chatbots over the past year: state-affiliated propaganda. This kind of misinformation inevitably contributes to the poor results.


Another important aspect of the study is how often chatbots decline to respond. In the past, AI chatbots readily declined to answer, particularly on news-related topics. In August 2024, NewsGuard recorded a 31% non-response rate, covering cases where the AI didn’t know how to answer the query. One year later, in August 2025, this non-response rate had dropped to 0%.

Chatbots are now more likely to debunk false claims (up from 51% to 65% over the same period), but the rate at which they repeat false information has also grown, from 18% to 35%. As NewsGuard said at the start of its report, that is nearly double. The overall failure rate, meaning false claims plus non-responses, has fallen from 49% to 35%, and AI will no longer leave you without an answer. Even so, that doesn’t guarantee the information will be correct.